查询速度慢的原因很多,常见如下几种

1、没有索引或者没有用到索引(这是查询慢最常见的问题,是程序设计的缺陷)

2、I/O吞吐量小,形成了瓶颈效应。

3、没有创建计算列导致查询不优化。

4、内存不足

5、网络速度慢

6、查询出的数据量过大(可以采用多次查询,其他的方法降低数据量)

7、锁或者死锁(这也是查询慢最常见的问题,是程序设计的缺陷)

8、sp_lock,sp_who,活动的用户查看,原因是读写竞争资源。

9、返回了不必要的行和列

10、查询语句不好,没有优化

可以通过如下方法来优化查询

1、把数据、日志、索引放到不同的I/O设备上,增加读取速度,以前可以将Tempdb应放在RAID0上,SQL2000不在支持。数据量(尺寸)越大,提高I/O越重要.

2、纵向、横向分割表,减少表的尺寸(sp_spaceuse)

3、升级硬件

4、根据查询条件,建立索引,优化索引、优化访问方式,限制结果集的数据量。注意填充因子要适当(最好是使用默认值0)。索引应该尽量小,使用字节数小的列建索引好(参照索引的创建),不要对有限的几个值的字段建单一索引如性别字段

5、提高网速;

6、扩大服务器的内存,Windows 2000和SQL server 2000能支持4-8G的内存。配置虚拟内存:虚拟内存大小应基于计算机上并发运行的服务进行配置。运行 Microsoft SQL Server? 2000 时,可考虑将虚拟内存大小设置为计算机中安装的物理内存的 1.5 倍。如果另外安装了全文检索功能,并打算运行 Microsoft 搜索服务以便执行全文索引和查询,可考虑:将虚拟内存大小配置为至少是计算机中安装的物理内存的 3 倍。将 SQL Server max server memory 服务器配置选项配置为物理内存的 1.5 倍(虚拟内存大小设置的一半)。

7、增加服务器CPU个数;但是必须明白并行处理串行处理更需要资源例如内存。使用并行还是串行程是MsSQL自动评估选择的。单个任务分解成多个任务, 就可以在处理器上运行。例如耽搁查询的排序、连接、扫描和GROUP BY字句同时执行,SQL SERVER根据系统的负载情况决定最优的并行等级,复杂的需要消耗大量的CPU的查询最适合并行处理。但是更新操作 Update,Insert,Delete还不能并行处理。

8、如果是使用like进行查询的话,简单的使用index是不行的,但是全文索引,耗空间。 like 'a%' 使用索引 like '%a' 不使用索引用 like '%a%' 查询时,查询耗时和字段值总长度成正比,所以不能用CHAR类型,而是VARCHAR。对于字段的值很长的建全文索引。

9、DB Server 和APPLication Server 分离;OLTP和OLAP分离

10、分布式分区视图可用于实现数据库服务器联合体。联合体是一组分开管理的服务器,但它们相互协作分担系统的处理负荷。这种通过分区数据形成数据库服务 器联合体的机制能够扩大一组服务器,以支持大型的多层 Web 站点的处理需要。有关更多信息,参见设计联合数据库服务器。(参照SQL帮助文件'分区视图')

a、在实现分区视图之前,必须先水平分区表

b、在创建成员表后,在每个成员服务器上定义一个分布式分区视图,并且每个视图具有相同的名称。这样,引用分布式分区视图名的查询可以在任何一个成员服务 器上运行。系统操作如同每个成员服务器上都有一个原始表的复本一样,但其实每个服务器上只有一个成员表和一个分布式分区视图。数据的位置对应用程序是透明 的。

11、重建索引 DBCC REINDEX ,DBCC INDEXDEFRAG,收缩数据和日志 DBCC SHRINKDB,DBCC SHRINKFILE. 设置自动收缩日志.对于大的数据库不要设置数据库自动增长,它会降低服务器的性能。 在T-sql的写法上有很大的讲究,下面列出常见的要点:首先,DBMS处理查询计划的过程是这样的:

1、 查询语句的词法、语法检查

2、 将语句提交给DBMS的查询优化器

3、 优化器做代数优化和存取路径的优化

4、 由预编译模块生成查询规划

5、 然后在合适的时间提交给系统处理执行

6、 最后将执行结果返回给用户其次,看一下SQL SERVER的数据存放的结构:一个页面的大小为8K(8060)字节,8个页面为一个盘区,按照B树存放。

12、Commit和rollback的区别 Rollback:回滚所有的事物。 Commit:提交当前的事物. 没有必要在动态SQL里写事物,如果要写请写在外面如: begin tran exec(@s) commit trans 或者将动态SQL 写成函数或者存储过程。

13、在查询Select语句中用Where字句限制返回的行数,避免表扫描,如果返回不必要的数据,浪费了服务器的I/O资源,加重了网络的负担降低性能。如果表很大,在表扫描的期间将表锁住,禁止其他的联接访问表,后果严重。

14、SQL的注释申明对执行没有任何影响

15、尽可能不使用光标,它占用大量的资源。如果需要row-by-row地执行,尽量采用非光标技术,如:在客户端循环,用临时表,Table变量,用 子查询,用Case语句等等。游标可以按照它所支持的提取选项进行分类: 只进 必须按照从第一行到最后一行的顺序提取行。FETCH NEXT 是唯一允许的提取操作,也是默认方式。可滚动性 可以在游标中任何地方随机提取任意行。游标的技术在SQL2000下变得功能很强大,他的目的是支持循环。

有四个并发选项

READ_ONLY:不允许通过游标定位更新(Update),且在组成结果集的行中没有锁。

OPTIMISTIC WITH valueS:乐观并发控制是事务控制理论的一个标准部分。乐观并发控制用于这样的情形,即在打开游标及更新行的间隔中,只有很小的机会让第二个用户更新 某一行。当某个游标以此选项打开时,没有锁控制其中的行,这将有助于最大化其处理能力。如果用户试图修改某一行,则此行的当前值会与最后一次提取此行时获 取的值进行比较。如果任何值发生改变,则服务器就会知道其他人已更新了此行,并会返回一个错误。如果值是一样的,服务器就执行修改。 选择这个并发选项OPTIMISTIC WITH ROW VERSIONING:此乐观并发控制选项基于行版本控制。使用行版本控制,其中的表必须具有某种版本标识符,服务器可用它来确定该行在读入游标后是否有 所更改。

在 SQL Server 中,这个性能由 timestamp 数据类型提供,它是一个二进制数字,表示数据库中更改的相对顺序。每个数据库都有一个全局当前时间戳值:@@DBTS。每次以任何方式更改带有 timestamp 列的行时,SQL Server 先在时间戳列中存储当前的 @@DBTS 值,然后增加 @@DBTS 的值。如果某 个表具有 timestamp 列,则时间戳会被记到行级。服务器就可以比较某行的当前时间戳值和上次提取时所存储的时间戳值,从而确定该行是否已更新。服务器不必比较所有列的值,只需 比较 timestamp 列即可。如果应用程序对没有 timestamp 列的表要求基于行版本控制的乐观并发,则游标默认为基于数值的乐观并发控制。

SCROLL LOCKS 这个选项实现悲观并发控制。在悲观并发控制中,在把数据库的行读入游标结果集时,应用程序将试图锁定数据库行。在使用服务器游标时,将行读入游标时会在其 上放置一个更新锁。如果在事务内打开游标,则该事务更新锁将一直保持到事务被提交或回滚;当提取下一行时,将除去游标锁。如果在事务外打开游标,则提取下 一行时,锁就被丢弃。因此,每当用户需要完全的悲观并发控制时,游标都应在事务内打开。更新锁将阻止任何其它任务获取更新锁或排它锁,从而阻止其它任务更 新该行。

然而,更新锁并不阻止共享锁,所以它不会阻止其它任务读取行,除非第二个任务也在要求带更新锁的读取。滚动锁根据在游标定义的 Select 语句中指定的锁提示,这些游标并发选项可以生成滚动锁。滚动锁在提取时在每行上获取,并保持到下次提取或者游标关闭,以先发生者为准。下次提取时,服务器 为新提取中的行获取滚动锁,并释放上次提取中行的滚动锁。滚动锁独立于事务锁,并可以保持到一个提交或回滚操作之后。如果提交时关闭游标的选项为关,则 COMMIT 语句并不关闭任何打开的游标,而且滚动锁被保留到提交之后,以维护对所提取数据的隔离。所获取滚动锁的类型取决于游标并发选项和游标 Select 语句中的锁提示。

锁提示 只读 乐观数值 乐观行版本控制 锁定无提示 未锁定 未锁定 未锁定 更新 NOLOCK 未锁定 未锁定 未锁定 未锁定 HOLDLOCK 共享 共享 共享 更新 UPDLOCK 错误 更新 更新 更新 TABLOCKX 错误 未锁定 未锁定 更新其它 未锁定 未锁定 未锁定 更新 *指定 NOLOCK 提示将使指定了该提示的表在游标内是只读的。

16、用Profiler来跟踪查询,得到查询所需的时间,找出SQL的问题所在;用索引优化器优化索引

17、注意UNion和UNion all 的区别。UNION all好

18、注意使用DISTINCT,在没有必要时不要用,它同UNION一样会使查询变慢。重复的记录在查询里是没有问题的

19、查询时不要返回不需要的行、列

20、用sp_configure 'query governor cost limit'或者SET QUERY_GOVERNOR_COST_LIMIT来限制查询消耗的资源。当评估查询消耗的资源超出限制时,服务器自动取消查询,在查询之前就扼杀掉。 SET LOCKTIME设置锁的时间

21、用select top 100 / 10 Percent 来限制用户返回的行数或者SET ROWCOUNT来限制操作的行

22、在SQL2000以前,一般不要用如下的字句: "IS NULL", "<>", "!=", "!>", "!<", "NOT", "NOT EXISTS", "NOT IN", "NOT LIKE", and "LIKE '%500'",因为他们不走索引全是表扫描。也不要在Where字句中的列名加函数,如Convert,substring等,如果必须用函数的时候, 创建计算列再创建索引来替代.还可以变通写法:Where SUBSTRING(firstname,1,1) = 'm'改为Where firstname like 'm%'(索引扫描),一定要将函数和列名分开。并且索引不能建得太多和太大。NOT IN会多次扫描表,使用EXISTS、NOT EXISTS ,IN , LEFT OUTER JOIN 来替代,特别是左连接,而Exists比IN更快,最慢的是NOT操作.如果列的值含有空,以前它的索引不起作用,现在2000的优化器能够处理了。相同 的是IS NULL,“NOT", "NOT EXISTS", "NOT IN"能优化她,而”<>”等还是不能优化,用不到索引。

23、使用Query Analyzer,查看SQL语句的查询计划和评估分析是否是优化的SQL。一般的20%的代码占据了80%的资源,我们优化的重点是这些慢的地方。

24、如果使用了IN或者OR等时发现查询没有走索引,使用显示申明指定索引: Select * FROM PersonMember (INDEX = IX_Title) Where processid IN (‘男’,‘女’)

25、将需要查询的结果预先计算好放在表中,查询的时候再Select。这在SQL7.0以前是最重要的手段。例如医院的住院费计算。

26、MIN() 和 MAX()能使用到合适的索引

27、数据库有一个原则是代码离数据越近越好,所以优先选择Default,依次为Rules,Triggers, Constraint(约束如外健主健CheckUNIQUE……,数据类型的最大长度等等都是约束),Procedure.这样不仅维护工作小,编写程 序质量高,并且执行的速度快。

28、如果要插入大的二进制值到Image列,使用存储过程,千万不要用内嵌Insert来插入(不知JAVA是否)。因为这样应用程序首先将二进制值转 换成字符串(尺寸是它的两倍),服务器受到字符后又将他转换成二进制值.存储过程就没有这些动作: 方法:Create procedure p_insert as insert into table(Fimage) values (@image), 在前台调用这个存储过程传入二进制参数,这样处理速度明显改善。

29、Between在某些时候比IN速度更快,Between能够更快地根据索引找到范围。用查询优化器可见到差别。 select * from chineseresume where title in ('男','女') Select * from chineseresume where between '男' and '女' 是一样的。由于in会在比较多次,所以有时会慢些。

30、在必要是对全局或者局部临时表创建索引,有时能够提高速度,但不是一定会这样,因为索引也耗费大量的资源。他的创建同是实际表一样。

31、不要建没有作用的事物例如产生报表时,浪费资源。只有在必要使用事物时使用它。

32、用OR的字句可以分解成多个查询,并且通过UNION 连接多个查询。他们的速度只同是否使用索引有关,如果查询需要用到联合索引,用UNION all执行的效率更高.多个OR的字句没有用到索引,改写成UNION的形式再试图与索引匹配。一个关键的问题是否用到索引。

33、尽量少用视图,它的效率低。对视图操作比直接对表操作慢,可以用stored procedure来代替她。特别的是不要用视图嵌套,嵌套视图增加了寻找原始资料的难度。我们看视图的本质:它是存放在服务器上的被优化好了的已经产生 了查询规划的SQL。对单个表检索数据时,不要使用指向多个表的视图,直接从表检索或者仅仅包含这个表的视图上读,否则增加了不必要的开销,查询受到干 扰.为了加快视图的查询,MsSQL增加了视图索引的功能。

34、没有必要时不要用DISTINCT和ORDER BY,这些动作可以改在客户端执行。它们增加了额外的开销。这同UNION 和UNION ALL一样的道理。 Select top 20 ad.companyname,comid,position,ad.referenceid,worklocation, convert(varchar(10),ad.postDate,120) as postDate1,workyear,degreedescription FROM jobcn_query.dbo.COMPANYAD_query ad where referenceID in('JCNAD00329667','JCNAD132168','JCNAD00337748','JCNAD00338345','JCNAD00333138','JCNAD00303570', 'JCNAD00303569','JCNAD00303568','JCNAD00306698','JCNAD00231935','JCNAD00231933','JCNAD00254567', 'JCNAD00254585','JCNAD00254608','JCNAD00254607','JCNAD00258524','JCNAD00332133','JCNAD00268618', 'JCNAD00279196','JCNAD00268613') order by postdate desc

35、在IN后面值的列表中,将出现最频繁的值放在最前面,出现得最少的放在最后面,减少判断的次数

36、当用Select INTO时,它会锁住系统表(sysobjects,sysindexes等等),阻塞其他的连接的存取。创建临时表时用显示申明语句,而不是 select INTO. drop table t_lxh begin tran select * into t_lxh from chineseresume where name = 'XYZ' –commit 在另一个连接中Select * from sysobjects可以看到 Select INTO 会锁住系统表,Create table 也会锁系统表(不管是临时表还是系统表)。所以千万不要在事物内使用它!!!这样的话如果是经常要用的临时表请使用实表,或者临时表变量。

37、一般在GROUP BY 个HAVING字句之前就能剔除多余的行,所以尽量不要用它们来做剔除行的工作。他们的执行顺序应该如下最优:select 的Where字句选择所有合适的行,Group By用来分组个统计行,Having字句用来剔除多余的分组。这样Group By 个Having的开销小,查询快.对于大的数据行进行分组和Having十分消耗资源。如果Group BY的目的不包括计算,只是分组,那么用Distinct更快

38、一次更新多条记录比分多次更新每次一条快,就是说批处理好

39、少用临时表,尽量用结果集和Table类性的变量来代替它,Table 类型的变量比临时表好

40、在SQL2000下,计算字段是可以索引的,需要满足的条件如下:

a、计算字段的表达是确定的

b、不能用在TEXT,Ntext,Image数据类型

c、必须配制如下选项 ANSI_NULLS = ON, ANSI_PADDINGS = ON, …….

41、尽量将数据的处理工作放在服务器上,减少网络的开销,如使用存储过程。存储过程是编译好、优化过、并且被组织到一个执行规划里、且存储在数据库中的 SQL语句,是控制流语言的集合,速度当然快。反复执行的动态SQL,可以使用临时存储过程,该过程(临时表)被放在Tempdb中。以前由于SQL SERVER对复杂的数学计算不支持,所以不得不将这个工作放在其他的层上而增加网络的开销。SQL2000支持UDFs,现在支持复杂的数学计算,函数 的返回值不要太大,这样的开销很大。用户自定义函数象光标一样执行的消耗大量的资源,如果返回大的结果采用存储过程

42、不要在一句话里再三的使用相同的函数,浪费资源,将结果放在变量里再调用更快

43、Select COUNT(*)的效率教低,尽量变通他的写法,而EXISTS快.同时请注意区别: select count(Field of null) from Table 和 select count(Field of NOT null) from Table 的返回值是不同的。

44、当服务器的内存够多时,配制线程数量 = 最大连接数+5,这样能发挥最大的效率;否则使用 配制线程数量<最大连接数启用SQL SERVER的线程池来解决,如果还是数量 = 最大连接数+5,严重的损害服务器的性能。

45、按照一定的次序来访问你的表。如果你先锁住表A,再锁住表B,那么在所有的存储过程中都要按照这个顺序来锁定它们。如果你(不经意的)某个存储过程中先锁定表B,再锁定表A,这可能就会导致一个死锁。如果锁定顺序没有被预先详细的设计好,死锁很难被发现

46、通过SQL Server Performance Monitor监视相应硬件的负载 Memory: Page Faults / sec计数器如果该值偶尔走高,表明当时有线程竞争内存。如果持续很高,则内存可能是瓶颈。 Process:

1、% DPC Time 指在范例间隔期间处理器用在缓延程序调用(DPC)接收和提供服务的百分比。(DPC 正在运行的为比标准间隔优先权低的间隔)。 由于 DPC 是以特权模式执行的,DPC 时间的百分比为特权时间 百分比的一部分。这些时间单独计算并且不属于间隔计算总数的一部 分。这个总数显示了作为实例时间百分比的平均忙时。

2、%Processor Time计数器 如果该参数值持续超过95%,表明瓶颈是CPU。可以考虑增加一个处理器或换一个更快的处理器。

3、% Privileged Time 指非闲置处理器时间用于特权模式的百分比。(特权模式是为操作系统组件和操纵硬件驱动程序而设计的一种处理模式。它允许直接访问硬件和所有内存。另一种模 式为用户模式,它是一种为应用程序、环境分系统和整数分系统设计的一种有限处理模式。操作系统将应用程序线程转换成特权模式以访问操作系统服务)。 特权时间的 % 包括为间断和 DPC 提供服务的时间。特权时间比率高可能是由于失败设备产生的大数量的间隔而引起的。这个计数器将平均忙时作为样本时间的一部分显示。

4、% User Time表示耗费CPU的数据库操作,如排序,执行aggregate functions等。如果该值很高,可考虑增加索引,尽量使用简单的表联接,水平分割大表格等方法来降低该值。 Physical Disk: Curretn Disk Queue Length计数器该值应不超过磁盘数的1.5~2倍。要提高性能,可增加磁盘。 SQLServer:Cache Hit Ratio计数器该值越高越好。如果持续低于80%,应考虑增加内存。 注意该参数值是从SQL Server启动后,就一直累加记数,所以运行经过一段时间后,该值将不能反映系统当前值。

47、分析select emp_name form employee where salary > 3000 在此语句中若salary是Float类型的,则优化器对其进行优化为Convert(float,3000),因为3000是个整数,我们应在编程时使 用3000.0而不要等运行时让DBMS进行转化。同样字符和整型数据的转换。

[优化]ASP.NET缓存机制

[优化]使用Google Page Speed优化Web前端性能

关于项目开发者

项目的开发者基本上是Google的工程师,这里需要提到的一个人:Steve Souders。他曾经效力于Yahoo,是YSlow项目的开发者之一,而且还是Firebug Work Group 成员之一。他一直以来致力于高性能Web应用领域。更加有趣的是,在Stanford CS系就开了这么一门课:High Performance Web Sites (CS193H) 。另外,他还写了两本书,都在O’reilly出版,分别是High Performance Web Sites 与 Even Faster Web Sites。

Page Speed是什么

Page Speed是Firefox的扩展,准确地说是Firebug的扩展。Firebug的强大功能我已经介绍过了,Page Speed就是对其进行了进一步扩展,集成的功能包括:

- 页面性能综合分析,可以针对页面提供综合报告和建议

- 内置JavaScript以及图片优化,包括JS Minify

- 改进的资源请求显示,对于Firebug Net模块的改进显示

- 页面请求活动视图,以直观的图标方式显示各请求的加载时间顺序以及每个请求各部分的时间消耗,开发人员可以根据这些数据找到性能的瓶颈

- JS性能优化,可以分析出未被调用的以及可以延迟调用的函数

安装

首先,需要安装Firebug,然后在这里进行安装。基本要求如下:

- Mozilla Firefox 3.0.4及以上

- Firebug 1.3.3及以上

使用

安装好以后,打开Firebug,可以看到新增的两个标签页:Page Speed与Page Speed Activity,如下图所示:

其中,Page Speed标签页包括两个功能:Analyze Performance与Show Resources,其中Analyze Performance是Page Speed的核心功能。点击以后Page Speed开始工作,几秒钟以后就会得出一份详细的性能分析报告:

其中各项按照重要性进行排序,展开每一部分,可以得到详细的报告。其中,红色图标表示未进行优化,黄色表示可以进行进一步优化,绿色表示已经进行优化。

其余部分的功能可以在Google Code的官方主页上找到,这里就不赘述了,只重点介绍Analyze Performance这一功能。

性能优化技巧

其实上图的每一项都是Page Speed提供的优化标准,Page Speed就是按照这一条条标准进行分析的。需要拿出来讲的包括:

使用gzip压缩

这里放在第一,是性能优化效果最显著的一步。所谓gzip压缩是一种开发的压缩算法,目前的主流浏览器(Firefox, Safari, Chrome, IE4及以上)与主流服务器(Apache, Lighttpd, Nginx)均对其有很好的支持。gzip压缩是通过HTTP 1.1协议中的Content-Encoding : gzip来进行标记说明,其可以明显减少文本文件的大小,从而节省带宽和加载时间。我做过的一个实验,发现启用gzip后,JQuery 1.2.6 minify版本的大小从54.4k减少到16k,减少了70%。gzip适用的情况包括:

- HTML\CSS\JavaScript文件,gzip算法对于文本文件的效率比较高,而jpg/gif/png/pdf等二进制文件本身已经进 行了一次压缩,再使用gzip的成效已经不明显了。而且gzip压缩需要消耗服务器的资源,而解压缩需要消耗浏览器的资源,对于比较大的二进制文件具有非 常高的性能消耗;

- 尽量使用一种大小写方式,要么全部大写,要么全部小写。学过数据结构和算法的同学一定知道压缩其本身就是对冗余信息熵进行压缩,如何数据原素的类型种类太多,其信息冗余度会降低,从而压缩率降低;

- 过小的文件(通常小于150个字节)不宜进行gzip压缩,因为gzip会在文件头加入相关信息,对于小文件反而会增加文件的长度;

关于各服务器如何启用gzip,可以参加相关文档说明。

如何检查gzip是否启用?使用Firebug,在Net模块中进行检查HTTP Header是否有Content-Encoding gzip标记,参见下图:

最小化JS和图片

对于JavaScript文件本身具有非常大的优化空间。所谓JavaScript压缩,就是通过一些工具将函数、变量名进行优化(其实就是尽可能 缩短变量名长度),消除多余字符(比如空格、换行符、注释等),最终得到的代码可以在分析和执行上得到性能提升。压缩后得到的代码对于机器而言是可读的, 对于人来说就不行了,因为文件内容已经面目全非。所以压缩一般用于生产期的代码,不能使用于开发期。

同样的道理,图片内容中也有一定的冗余信息,比如文件头部的一些内容描述(这些内容在jpg)图片上尤其如此。通过一定的工具(比如GIMP)可以去除这些信息,从而节省一定的空间。

幸运的是,Page Speed已经内置了这些功能,我们不需要找第三方的工具。如下图所示,可以看到对JS文件进行最小化可以得到的预期效果:

比如JQuery.form.js,最小化后减少11.9kb,减少54.8%的空间。点击minified version,在新窗口中可以看到Page Speed为你优化好的版本,直接更新到服务器就可以了。

关于图片优化,操作方法同上。

启用浏览器缓存

这是经常使用的方法。当请求的资源在浏览器本地得到缓存后,第二次请求这些内容就可以从直接缓存中取出,减少了连线的HTTP请求。

HTTP 1.1提供的缓存方法主要有两种:

- Expires and Cache-Control: max-age. 即内容在缓存中的生命有效期。第一次请求后,在生命有效期之内的后期请求直接从本地缓存中取,不过问服务器;

- Last-Modified and ETag. 其中Last-Modified标记文件最后一次修改的时间,浏览器第二次请求是在头部加入上次请求缓存下来的Last-Modified时间,如何两次 请求期间服务器的内容没有进行修改,服务器直接返回304 Not Modified,浏览器接到以后直接使用本地缓存。否则,服务器会返回200以及更新后的版本。ETag是服务器对于文件生成的Hash散列,其生成算 法与最后一次修改的时间相关。浏览器第二次请求发送上次的ETag信息,服务器通过简单的比对就知道是否应该返回304还是200。

关于各缓存头部的设置可以参考各服务器的相关文档。

JavaScript延迟加载

通常浏览器在解析HTML时遇到JS文件会先下载,解析执行后才会下载后面的内容,期间自然会造成一定的延时。为了提高性能,尽可能将JS文件的位 置后移,如果可能,还可以通过部分代码进行异步加载。另外,对于JS和CSS在必须放置在一起情况,需要报JS放置在CSS之后,这样CSS与JS文件可 以同步下载。

文件拼接

这里主要包括JS/CSS等文本文件和图片。对于文本文件,尽可能将同一类型放置到一个文件中,减少HTTP请求。对于CSS背景图片,可以使用 Sprit技术将图片拼接到一起,然后使用background-position属性选择对应的图片。Google首页上的这个图片就是一个很好的例 子:

其它

更多优化规则,可以参考Page Speed的说明以及Steve Souders个人主页上的相关信息。

结论

虽然现在网络速度越来越快,Web前端优化仍然非常重要;永远不要假设用户的网络速度和你一样快,毕竟由于ISP的各方面原因,各地的速度大不相同。良好的策略可以在有限的带宽资源下达到最大的性能发挥。

[SQL]SQL Server性能优化与数据库重构日志

最近网站的访问太慢,主要原因有以下三点:

- 数据库设计不合理,关联表太多造成查询速度慢

- 数据库视图嵌套太多直接影响性能

- 数据库索引除主键索引外几乎没有

- ORM映射框架没有缓存机制,利用反射

- 通用查询存储过程性能问题

- Controller层的代码编写效率低下,需要重构

- View层异步操作太多

总结了以上几点后,决定花时间彻底清查旧账进行数据到表示层的重构,首先从数据库下手,利用SQL Server的Profile工具对现有数据库操作的监控,并利用SQL Server优化引擎对监控结果进行了分析,分析结果如下:

1.工作负荷分析报告:

| 语句类型 | 语句数 | 开销降低 | 开销增加 | 未更改 |

| Select | 833 | 449 | 1 | 383 |

| Update | 55 | 3 | 3 | 49 |

| Insert | 395 | 303 | 34 | 58 |

通过上述统计可以看出大部分还是查询的操作比较多,至于Insert也是因为通用查询存储过程中利用Insert INTO表变量进行了分页造成的,现在的瓶颈应该基本集中在查询存储过程以及表间关系复杂度上,造成left JOIN和Inner JOIN 特别是UNION ALL 表以及视图的联合更加耗费资源和性能,因此利用数据引擎优化工具,分析后的索引方案创建了部分索引以及索引统计,提高了查询性能并不是很明显。

2009-07-18 调整数据库中表的关系,尽量去除表间关系,减少不必要的多表查询操作

1.论坛表结构调整:

目前,论坛速度慢的主要原因是因为利用视图联合了信息内容和论坛本身的帖子导致查询速度慢,具体表关系如下图:

可见,Tang_BBSInfo表的设计不符合数据库范式:一个字段只有一种含义。InformationID同时存储了3个表的

Id,需求是图片信息的标题和描述 视频信息的标题和描述 新闻信息的标题和内容都要作为帖子发布到论坛,同时整站在上述三处发布评论,都作为论坛该帖子的回复显示到论坛,根据这个需求设计的表结构如下:

| Title | Note | InformationID | ModuleID |

| 论坛帖子 | 帖子内容 | 0 | 50(论坛) |

| NULL | NULL | 123002 | 52(图片) |

| NULL | NULL | 2323 | 53(视频) |

| NULL | NULL | 232322 | 9(新闻) |

可见title和note来源非论坛的都为NULL值,完全凭借InformationID+ModuleID来区别是来自图片、视频还是新闻,这样查询论坛帖子的时候需要UNION 3个表的数据,特别是UNION严重影响性能,目前信息才几百条就已经显示超时了。

更新Tang_bbsInfo的帖子标题和内容为信息的标题和内容,就不用再关联信息表(数据量大的表)这样就可以减少查询时间,另外页面上显示直接查询Tang_BbsInfo表即可

[SQL]优化SQL Server数据访问10个步骤四

Introduction

Imagine you are a doctor, or a physician. What do you do when one of your patients arrive feeling out of sorts and fallen ill?

You try to understand the cause of his/her illness right? Yes. This is the most important thing to do first. Because, in order to cure your patient, you need to find out what causes your patient to fall ill. Most of the cases you study the symptoms and based upon your knowledge and experience you suggest a treatment, which works most of the cases.

But, you may not be lucky in all cases. Some patients do have complex problems with multiple types of illnesses. Studying the symptoms alone is not sufficient in these cases. You suggest diagnosing the problems and do prescribe one or more tests to be done. Pathologists then collect samples from the patient and start diagnosing for finding out the causes of the illness. Once you get the testing report, you are in a better position in understanding the problem that caused the patient’s illness and you are most likely to prescribe the correct treatment plan to cure.

Hm..sounds a familiar situation. Isn’t this the same thing we have to do while trying to Debug or troubleshoot any problem in our software systems?

Yes it is. So, while we were actually trying to optimize our data access operations, it’s time for us to learn how to diagnose different performance and related problems in SQL Server database. Take a look at the following articles to learn the step by step process that we’ve already carried out so far.

Top 10 steps to optimize data access in SQL Server. Part I (Use Indexing)

Top 10 steps to optimize data access in SQL Server. Part II (Re-factor TSQLs and apply best practices)

Top 10 steps to optimize data access in SQL Server. Part III (Apply advanced indexing and denormalization)

As you might have seen already, we have gone through 7 optimization steps so far. So let us proceed to step 8 now:

Step8: Diagnose performance problem, use SQL Profiler and Performance Monitoring tool effectively.

The SQL Profiler tool is perhaps the most well-known performance troubleshooting tool in the SQL server arena. Most of the cases, when a performance problem is reported, this is the first tool that you are going to launch to investigate the problem.

As you perhaps already know, the SQL Profiler is a graphical tool for tracing and monitoring the SQL Server instance, mostly used for profiling and measuring performance of the TSQLs that are executed on the database server. You can capture about each event on the server instance and save event data to a file or table to analyze later. For example, if the production database perform slowly, you can use the SQL Profiler to see which stored procedures are taking too much time to execute.

Basic use of SQL Profiler tool

There is a 90% chance that you already know how to use it. But, I assume lots of newbie’s out there reading this article might feel good if there is a section on basic usage of SQL Profiler (If you know this tool already, just feel free to skip this section). So, here we put a brief section:

Start working with the SQL Profiler in the following way

- Launch the SQL Profiler (Tools->SQL Server Profiler in the Management Studio) and connect it to the desired SQL Server instance. Select a new trace to be created (File->New Trace) and select a trace template (A trace template is a template where some pre-selected events and columns are selected to be traced).

Figure: Trace template

- Optionally, select particular events (Which should be captured in the trace output) and select/deselect columns (To specify the information you want to see in the trace output).

Figure : Select events to be captured for tracing

- Optionally, organize columns (Click the “Organize Columns” button) to specify the order of their appearance in the trace. Also, specify column filter values to filter the event data which you are interested in. For example, click on the “Column Filters and specify the database name value (In the “Like” text box) to trace events only for the specified database. Please note that, filtering is important because, SQL profiler would otherwise capture all unnecessary events and trace too many information that you might find difficult to deal with.

Figure : Filter column values

- Run the profiler (By clicking the green Play button) and wait for the events to be captured on the trace.

Figure : Running profiler

- When enough information is traced, stop the profiler (By pressing the red Stop icon) and save the trace either into a trace file or into an SQL Server table (You have to specify a table name and the SQL Server profiler would create the table with necessary fields and store all tracing records inside it).

Figure : Storing profiler trace data into table

- If the trace is saved on a table, issue a query to retrieve the expensive TSQL’s using a query like the following :

Select TextData,Duration,…, FROM Table_Name ORDER BY

Duration DESC

Figure : Querying for most expensive TSQL/Stored procedure

Voila! You just identified the most expensive TSQLs in your application in a quick time.

Effective use of SQL Profiler to troubleshot performance related problems

Most of the cases, the SQL profiler tool is used to trace the most expensive TSQLs/Stored Procedures in the target database to find the culprit one that is responsible for performance problem (Described above). But, the tool is not limited to provide only TSQL duration information. You can use many powerful features of this tool to diagnose and troubleshoot different kinds of problems that could occur due to many possible reasons.

When you are running the SQL Profiler, there are two possibilities. Either you have a reported performance related issue that you need to diagnose, or, you need to diagnose any possible performance issue in advance so that you can make sure you system would perform blazing fast in the production after deployment.

Following are some tips that you can follow while using the SQL Profiler tool:

-

Use existing templates, but, create your own template when in need.

Most of the times the existing templates will serve your purpose. But still, there could be situations when you will need a customized template for diagnosing a specific kind of problem in the database server (Say, Deadlock occurring in the production server). In this situation, you can create a customized template using FileàTemplatesàNew Template and specifying the Template name and events and columns. Also, you can select an existing template and modify it according to your need.

Figure : Creating a new template

Figure : Specifying events and columns for the new template

-

Capture TableScan or DeadLock events

Did you know that you can listen to these two interesting events using the SQL profiler?

Imagine a situation where you have done all possible indexing in your test database, and after testing, you have implemented the indexes in the production server. Now suppose, for some unknown reasons, you are not getting the desired performance in the production database. You suspect that, some undesired table scanning is taking place while executing one of the queries. You need to detect the table scan and get rid of it, but, how could you investigate this?

Another situation. Suppose, you have a deployed system where error mails are being configured to be sent to a pre-configured email address (So that, the development team can be notified instantly and with enough information to diagnose the problem). All on a sudden, you start getting error mails stating that deadlocks are occurring in the database (With exception message from database containing database level error codes). You need to investigate and find the situation and corresponding set of TSQLs that are responsible for creating the deadlock in the production database. How would you carry this out?

SQL profiler gives you possible ways to investigate it. You can edit the templates so that, the profiler listens for any Table scan or deadlock event that might take place in the database. To do this, check the Deadlock Graph, Deadlock and DeadLock chain events in the DeadLock section while creating/editing the tracing template. Then, start the profiler and run your application. Sooner or later when any table scan or deadlock occurs in the database, the corresponding events would be captured in the profiler trace and you would be able to find out the corresponding TSQLs that are responsible for the above described situation. Isn’t that nice?

Note: You might also require the SQL Server log file to write deadlock events so that you can get important context information from the log when the deadlock took place. This is important because, sometimes you need to combine the SQL Server deadlock trace information with that of the SQL Server log file to detect the involved database objects and TSQLs that are causing deadlocks.

Figure : Detecting Table scan

Figure : Detecting Deadlocks

-

Create Replay trace

As you already know, in order to troubleshoot any performance problem in the production database server, you need to try to simulate the same environment (Set of queries, number of connections in a given time period that are executed in the production database) in your Test database server first so that, the performance problem can be re-generated (Without re-generating the problem, you can’t fix it, right?). How can you do this?

The SQL Profiler tool lets you do this by using a Replay trace. You can use a TSQL_Replay Trace template to capture events in the production server and save that trace in a .trace file. Then, you can replay the trace on test server to re-generate and diagnose problems.

Figure : Creaging Replay trace

To learn more about TSQL Replay trace, see http://msdn.microsoft.com/en-us/library/ms189604.aspx

-

Create Tuning trace

The Database tuning advisor is a great tool that can give you good tuning suggestions to enhance your database performance. But, to get a good and realistic suggestion from the tuning advisor, you need to provide the tool with “appropriate load” that is similar to the production environment. That is, you need to execute the same the set of TSQL’s and open the same number of concurrent connections in the test server and then run the tuning advisor there. The SQL Profiler lets you capture the appropriate set of events and columns (for creating load in the tuning advisor tool) by the Tuning template. Run the profiler using the Tuning template, capture the traces and save it. Then, use the tuning trace file for creating load in the test server by using the Tuning advisor tool.

You would like to learn and use the Database tuning advisor to get tuning suggestions while you try to troubleshoot performance issues in SQL Server. Take a look at this article to learn this interesting tool : http://msdn.microsoft.com/en-us/library/ms166575.aspx

Figure : Create Tuning profiler trace

-

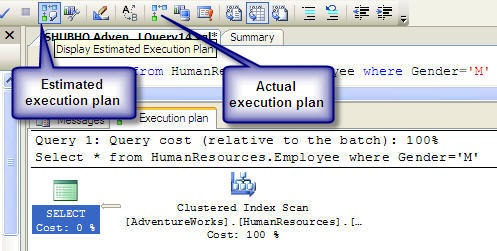

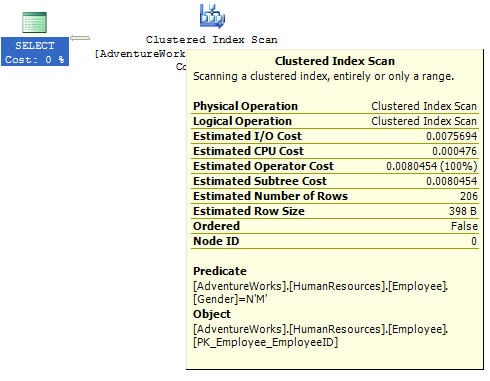

Capture ShowPlan to include SQL execution plans in the profiler.

There will be times when the same query will give you different performance in the Production and Test server. Suppose, you have been reported with this kind of a problem and to investigate the performance problem, you need to take a look at the TSQL execution plan that is being used in the Production server for executing the actual Query.

Now, it is obvious that, you just cannot run that TSQL (That is causing performance problem) in the production server to view the actual execution plan for lots of reasons. You can of course take a look at the estimated execution plan for a similar query, but, this execution plan might not reflect you the true execution plan that is used in reality in a fully loaded production database.The SQL Profiler can help you in this regard. You can include ShowPlan, or, ShowPlan XML in your trace while profiling in the Production server. Doing this would capture SQL plans along with the TSQL text while tracing. Do this in the test server too and analyze and compare both execution plans to find out the difference in them very easily.

Figure : Specifying Execution plans to be included in the trace

Figure : Execution plan in the profiler trace

Use Performance monitoring tool (Perfmon) to diagnose performance problems

When you encounter performance related problems in your database, the SQL Profiler would enable you to diagnose and find out the reasons behind the performance issues most of the cases. But, sometimes the Profiler alone cannot help you identifying the exact cause of the problems.

For example, analyzing the query execution time using the Profiler in the production server you’ve seen that, the corresponding TSQL is executing slowly (Say, 10 seconds), though, the same query takes a much lower time in the Test server (Say, 200 ms). You analyzed the query execution plans and data volume and found those to be roughly the same. So there must have been some other issues that are creating a bottleneck situation in the production server. How would you diagnose this problem then?

The Performance Monitoring Tool (Known as Perfmon) comes to your aid in these kinds of situations. Performance Monitor is a tool (That is built in within the Windows OS) gathers statistical data related to hardware and software metrics from time to time.

When you issue a TSQL to execute in the database server, there are many stakeholders participating in the actions to execute the query and return result. These include the TSQL Execution engine, Server buffer cache, SQL Optimizer, Output queue, CPU, Disk I/O and lots of other things. So, if one of these does not perform its corresponding task well and fast, the ultimate query execution time taken by the database server would be high. Using the Performance Monitoring tool you can take a microscopic look at the performance of these individual components and identify the root cause of the performance problem.

With the Performance Monitoring tool (System monitor) you can create a counter log including different built in counters (That measures performance of each individual components while executing the queries) and analyze the counter log with a graphical view to understand what’s going on in detail. Moreover, you can combine the Performance counter log with the SQL Profiler trace for a certain period of time to better understand the complete situation while executing a query.

Basic use of Performance Monitor

Windows has lots of built in objects with their corresponding performance counters. These are installed when you install the Windows. While the SQL Server gets installed, Performance counters for SQL server also get installed. Hence, these counters are available when you define a performance counter log.

Follow these steps to create a performance counter log:

- Launch the Performance Monitor tool from Tools->Performance Monitor in the SQL profiler tool

Figure : Launch Performance Monitor tool

- Create a new Performance Counter log by clicking on the Counter Logs->New Log Settings

Figure : Create a Performance counter log

Specify log file name and press OK.

Figure : Specify name for the Performance coutner log

- Click on the “Add Counters” button to select the preferred counters in the newly created counter log.

Figure : Add counters for the Performane counter log

- Add the preferred counters by selecting desired objects and their corresponding counters from the list. Click on “Close” when done.

Figure : Specify objects and corresponding counters

- The selected counters will be displayed in the form

Figure : Specify counters

- Click on the Log Files tab and click on the “Configure” tab to specify the log file location and modify log file name if required. Click “OK” when done.

Figure : Specify Performance counter log location

- Click on the “Schedule” tab to specify a schedule for reading the counter information and write in the log file. Optionally, you can also select “Manually” for “Start log” and “Stop log” options in which case, the counter data will be logged after you start the performance counter log

Figure : Scheduling the Performance coutner log operation

- Click on the “General” tab and specify the interval for gathering counter data

Figure : Setting counter sample interval

- Press “OK” and start performance counter log by selecting the counter log and clicking start. When done, stop the counter log.

Figure : Starting the Performance counter logging

- For viewing log data close and open the Performance monitor tool again. Click on the view log icon (The icon in the red box) to view counter log. Click on the “Source” tab and select “Log files” radio button and add the log file to view by clicking on the “Add button”.

Figure : Viewing Performance coutner log

- By default, only three default counters are selected to be shown in the counter log output. Specify other counters (That were included while creating the Coutner log) by clicking on the “Data” tab and selecting the desired counters by clicking on the “Add” button.

Figure : Specifying coutners to view data in log

- Click “OK” button to view the performance counter log output in a graphical view

Figure : Viewing the Performance coutner log

Correlate Performance counter log and SQL Profiler trace for better investigation

The SQL Profiler can give you information about the long running queries, but, it cannot provide you with the context information to explain the reason for long query execution time.

On the other hand, the Performance monitor tool gives you statistics regarding the individual component’s performance (Context information) but, it does not give you information regarding the query execution time.

So, by combining the performance counter log with the SQL Profiler trace you can get the complete picture while diagnosing performance problems in SQL Server.

Correlating these two things serve another important purpose also. If the same query takes longer time in production server to execute, but, takes shorter time in test server, that indicates the test server may not have the same amount of load, environment and query execution context as the production server has. So, to diagnose the performance problem, you need a way to simulate the Production server’s query execution context in the Test server somehow. You can do this by correlating the SQL Profiler trace at the Test server with the Performance counter log that is taken at the Production server (Obviously, the SQL Profiler trace and Performance counter log that are taken within a same time period can only be correlated).

Correlating these two tool’s output can help you identifying the exact root cause of the performance problem. For example, you might find that each time the query takes 10 seconds to execute in the Production server, the CPU utilization reaches up to 100%. So, instead of trying to tune the SQL, you should investigate the reason why the CPU utilization rises up to 100% to optimize the query performance.

Follow these steps to correlate the SQL Profiler trace with the Performance counter log

- Create a Performance Counter log by incorporating the following common performance counters. Specify “Manual” option for starting and stopping the counter log.

–Network Interface\Output Queue length

–Processor\%Processor Time

–SQL Server:Buffer Manager\Buffer Cache Hit Ratio

–SQL Server:Buffer Manager\Page Life Expectancy

–SQL Server:SQL Statistics\Batch Requests/Sec

–SQL Server:SQL Statistics\SQL Compilations

–SQL Server:SQL Statistics\SQL Re-compilations/Sec

Create the performance counter log, but, don’t start it.

- Using the SQL Profiler, create a trace using the TSQL Duration template (For simplicity). Add “Start Time” and “End Time” column to the trace and Start the Profiler trace and the Performance counter log created in the previous step at the same time.

- When enough tracing has been done, stop both SQL Profiler trace and the Performance counter log at the same time. Save the SQL Profiler trace as a .trc file in the file system.

- Close the SQL Profiler trace window and open the trace file again with the Profiler s(.trc file) that was saved in the previous step (Yes, you have to close the Profiler trace and open the trace file again, otherwise, you won’t get the “Import Performance Data” option enabled. This looks like a bug in the Management Studio). Click on the “File->Import Performance Data” to correlate the Performance Counter log with the SQL Profiler trace. (If the Import Performance Data option is disabled, something is wrong and review your steps from the beginning). A file browser window will appear and select the Performance counter log file in the file system that is to be correlated.

- A window will appear to select the counters to correlate. Select all counters and press “OK”. You will be presented with a screen like the below that is the correlated output of SQL Profiler trace and Performance Counter log.

Figure : Correlated output of SQL Profiler and Performance Monitor tool

- Click on a particular TSQL in the Profiler trace output (In the upper part of the window). You’ll see that, a Red vertical bar will be set in the Performance counter log output to indicate the particular counter statistics when that particular query was being executed. Similarly, click on the Performance counter log output any where you see a certain performance counter’s value is high (Or, above the normal value). You’ll see that, the corresponding TSQL that was being executed on the database server will be highlighted in the SQL Profiler trace output.

I bet, you’ll surely find correlating these two tools output extremely interesting and handy.

Last words

There are a bunch of tools and techniques available for diagnosing performance problems in SQL Server. For example, you may like to review the SQL Server log file when any such problems are reported. Also, you may like to use the Database Tuning Advisor (DTA) for getting tuning suggestions for optimizing the database. Whatever the tool you use, you need to be able to take a deep look into the internal details to understand what’s going on behind the seen. Once you identify the actual cause of the performance problem, solution is the easiest part most of the cases.

I assume we have sufficient knowledge on diagnosing performance problems in SQL Server so far, along with the optimization steps that we’ve gone through. We are now heading towards the last part of this series of articles. Our optimization mission is going to be ended in the following next article:

"Top 10 steps to optimize data access in SQL Server. Part V (Optimize database files and apply partitioning)"

All's well that ends well. Hope to see you there!

License

This article, along with any associated source code and files, is licensed under The Code Project Open License (CPOL)

About the Author

| M.M.Al-Farooque Shubho Member |

A passionate software developer who loves to think, learn and observe the world around. Working in the .NET based software application development for quite a few years. My LinkedIn profile will tell you more about me. Have a look at My other CodeProject articles. Learn about my visions, thoughts and findings at My Blog. Awards: Prize winner in Competition "Best Asp.net article of May 2009" Prize winner in Competition "Best overall article of May 2009" Prize winner in Competition "Best overall article of April 2009"

|

[SQL]优化SQL Server数据访问10个步骤三

Introduction

Hello again!

We are in the process of optimizing an SQL Server database, and so far we have done lots of things. We applied indexing in our database tables and then re-factored the TSQL’s to optimize the data access routines. If you are wondering where we did all these and what are the things we have exactly done, take a look at the following articles in this series:

Top 10 steps to optimize data access in SQL Server. Part I (Use Indexing)

Top 10 steps to optimize data access in SQL Server. Part II (Re-factor TSQLs and apply best practices)

So, you did all these and still having performance problems with your database? Let me tell you one thing. Even after you have applied proper indexing along with re-factoring your TSQLs with best practices, some data access routines might still be there, which would be expensive, in terms of their execution time. There must have been some smart ways to deal with these.

Yes there are. SQL server offers you some rich indexing techniques that you might have not used earlier. These could surprise you with the performance benefits they possibly offer. Let us start implementing those advanced indexing techniques:

Step6 : Apply some advanced indexing techniques

Implement computed columns and create index on these

You might have written application codes where you select a result set from the database, and, do a calculation for each rows in the result set to produce the ultimate information to show in the output. For example, you might have a query that retrieves Order information from the database and in the application you might have written codes to calculate the total Order prices by doing arithmetic operations on Product and Sales data). But, why don’t you do all these processing in the database?

Take a look at the following figure. You can specify a database column as a “Computed column” by specifying a formula. While your TSQL includes the computed column in the select list, the SQL engine will apply the formula to derive the value for this column. So, while executing the query, the database engine will calculate the Order total price and return the result for the computed column.

Figure : Computed Column

Sounds good. Using a computed column in this way would allow you to do the entire calculation in the back-end. But sometimes, this might be expensive if the table contains large number of rows and the computed column. The situation might get worse if the computed column is specified in the Where clause in a Select statement. In this case, to match the specified value in the Where clause, the database engine has to calculate computed column’s value for each row in the table. This is a very inefficient process because it always requires a table or full clustered index scan.

So, we need to improve performance on computed columns. How? The solution is, you need to create index on the computed columns. When an index is built on a computed column, SQL Server calculates the result in advance, and builds an index over them. Additionally, when the corresponding column values are updated (That the computed column depends on), the index values on computed column are also updated. So, while executing the query, the database engine does not have to execute the computation formula for every row in the result set. Rather, the pre-calculated values for the computed column are just get selected and returned from the index. As a result, creating index on computed column gives you excellent performance boost.

Note : If you want to create index on a computed column, you must make sure that, the computed column formula does not contain any “nondeterministic” function (For example, getdate() is a nondeterministic function because, each time you call it, it returns a different value).

Create "Indexed Views"

Did you know that you can create indexes on views (With some restrictions)? Well, if you have come this far, let us learn the indexed Views!

Why do we use Views?

As we all know, Views are nothing but compiled Select statements residing as the objects in the database. If you implement your common and expensive TSQLs using Views, it’s obvious that, you can re-use these across your data access routines. Doing this will enable you to join the Views with the other tables/views to produce an output result set, and, the database engine will merge the view definition with the SQL you provide, and, will generated an execution plan to execute. Thus, sometimes Views allow you to re-use common complex Select queries across your data access routines, and also let the database engine re-use execution plans for some portion of your TSQLs.

Take my words. Views don’t give you any significant performance benefits. In my early SQL days, when I first learned about views, I got exited thinking that, Views were something that “remembers” the result for the complex Select query it is built upon. But, soon I was disappointed to know that, Views are nothing but compiled queries, and Views just can’t remember any result set. (Poor me! I can bet, many of you got the same wrong idea about Views, in your first SQL days).

But now, I may have a surprise for you! You can do something on a View so that, it can truly “remembers” the result set for the Select query it is compost of. How? It’s not hard; you just have to create indexes on the View.

Well, if you apply indexing on a View, the View becomes an “Indexed view”. For an indexed View, the database engine processes the SQL and stores the result in the data file just like a clustered table. SQL Server automatically maintains the index when data in the base table changes. So, when you issue a Select query on the indexed View, the database engine simply selects values from an index which obviously performs very fast. Thus, creating indexes on views gives you excellent performance benefits.

Please note that, nothing comes free. As creating Indexed Views gives you performance boost, when data in the base table changes, the database engine has to update the index also. So, you should consider creating indexed Views when the view has to process too many rows with aggregate function, and when data and the base table do not change often.

How to create indexed View?

- Create/modify the view with specifying the SCHEMABINDING option

Collapse

Create VIEW dbo.vOrderDetails WITH SCHEMABINDING AS Select…

- Create a unique clustered index on the View

- Create non-clustered index on the View as required

Wait! Don’t get too much exited about indexed Views. You can’t always create indexes on Views. Following are the restrictions:

- The View has to be created with SCHEMABINDING option. In this case, the database engine will not allow you to change the underlying table schema.

- The View cannot contain any nondeterministic function, DISTINCT clause and subquery.

- The underlying tables in the View must have clustered index (Primary keys)

So, try finding the expensive TSQLs in your application that are already implemented using Views, or, that could be implemented using Views. Try creating indexes on these Views to boost up your data access performance.

Create indexes on User Defined Functions (UDF)

Did you know this? You can create indexes on User Defined Functions too in SQL Server. But, you can’t do this in a straight-forward way. To create index on a UDF, you have to create a computed column specifying an UDF as the formula and then you have to create index on the computed column field.

Here are the steps to follow:

- Create the function (If not exists already) and make sure that, the function (That you want to create index on) is deterministic. Add SCHEMABINDING option in the function definition and make sure that there is no non-deterministic function/operator (getdate(), or, distinct etc) in the function definition.

For example,

Collapse

Create FUNCTION [dbo.ufnGetLineTotal] ( -- Add the parameters for the function here @UnitPrice [money], @UnitPriceDiscount [money], @OrderQty [smallint] ) RETURNS money WITH SCHEMABINDING AS BEGIN return (((@UnitPrice*((1.0)-@UnitPriceDiscount))*@OrderQty)) END

- Add a computed column in your desired table and specify the function with parameters as the value of the computed column.

Collapse

Create FUNCTION [dbo.ufnGetLineTotal] ( -- Add the parameters for the function here @UnitPrice [money], @UnitPriceDiscount [money], @OrderQty [smallint] ) RETURNS money WITH SCHEMABINDING AS BEGIN return (((@UnitPrice*((1.0)-@UnitPriceDiscount))*@OrderQty)) END

Figure : Specifying UDF as computation formula for computed column

- Create an index on the computed column

We already have seen that we can create index on computed columns to retrieve faster results on computed columns. But, what benefit could be achieved by using UDF in the computed columns and creating index on those?

Well, doing this would give you a tremendous performance benefit when you include the UDF in a query, especially if you use UDFs in the join conditions between different tables/views. I have seen lots of join queries written using UDFs in the joining conditions. I’ve always thought UDFs in join conditions are bound to be slow (If number of results to process is significantly large) and there has to be a way to optimize it. Creating indexes on functions in the computed columns is the solution.

Create indexes on XML columns

Create indexes on XML columns if there is any. XML columns are stored as binary large objects (BLOBs) in SQL server (SQL server 2005 and later) which can be queried using XQuery, but querying XML data types can be very time consuming without an index. This is true especially for large XML instances because SQL Server has to shred the binary large object containing the XML at runtime to evaluate the query.

To improve query performance on XML data types, XML columns can be indexed. XML indexes fall in two categories:

Primary XML indexes

When the primary index on XML column is created, SQL Server shreds the XML content and creates several rows of data that include information like element and attribute names, the path to the root, node types and values, and so on. So, creating the primary index enable the SQL server to support XQuery requests more easily.

Following is the syntax for creating primary XML index:

Create PRIMARY XML INDEX

index_name

ON <object> ( xml_column )

Secondary XML indexes.

Creating the primary XML indexes improves XQuery performance because the XML data is shredded already. But, SQL Server still needs to scan through the shredded data to find the desired result. To further improve query performance, the secondary XML index should be created on top of primary XML indexes.

Three types of secondary XML indexes are there. These are:

- “Path” Secondary XML indexes: Useful when using the .exist() methods to determine whether a specific path exists.

- “Value” Secondary XML indexes: Used when performing value-based queries where the full path is unknown or includes wildcards.

- “Property” Secondary XML indexes: Used to retrieve property values when the path to the value is known.

Following is the syntax for creating the secondary XML indexes

Create XML INDEX

index_name

ON <object> ( xml_column )

USING XML INDEX primary_xml_index_name

FOR { VALUE | PATH | PROPERTY }

Please note that, the above guidelines are the basics. But, creating indexes blindly on each and every tables on the mentioned columns may not always result in performance optimization, because, sometimes, you may find that, creating indexes on some particular columns in some particular tables resulting in making the data insert/update operations in that table slower (Particularly, if the table has a low selectivity on a column)., Also if the table is a small one containing small number of rows (Say, <500), creating index on the table might in turn increase the data retrieval performance (Because, for smaller tables, a table scan is faster). So, we should be judicious while determining the columns to create indexes on.

Step7:Apply de-normalizations, use history tables and pre-calculated columns

De-normalization

If you are designing a database for an OLTA system (Online Transaction Analytical system that is mainly a data warehouse which is optimized for read-only queries), you can (and, should) apply heavy de-normalizing and indexing in your database. That is, same data will be stored across different tables, but, the reporting and data analytical queries would run very fast on these kinds of databases.

But, if you are designing a database for an OLTP system (Online Transaction Processing System that is mainly a transactional system where mostly data update operations take place (That is, Insert/Update/Delete operations which we are used to work with most of the times), you are advised to implement at least 1st, 2nd and 3rd Normal forms so that, you can minimize data redundancy and thus, minimize data storage and increase manageability.

Despite the fact that we should apply normalizations in an OLTP system, we usually have to run lots of read operations (Select queries) on the database. So, after applying all optimization techniques so far, if you find that some of your data retrieval operations still not performing efficiently, you need to consider applying some sort of de-normalizations. So, the question is, how should you apply de-normalization and why this would improve performance?

Let us see a simple example to find the answer

Let’s say we have two tables OrderDetails(ID,ProductID,OrderQty) and Products(ID,ProductName) that stores order Detail information and Product Information respectively. Now to select the Product names with their ordered quantity for a particular order, we need to issue the following query that requires joining the OrderDetails and Products table.

Select Products.ProductName,OrderQty

FROM orderDetails INNER JOIN Products

ON orderDetails.ProductID = Products.ProductID

Where SalesOrderID = 47057

Now, if these two tables contain huge number of rows, and, if you find that, the query is still performing slowly even after applying all optimization steps, you can apply some de-normalization as follows:

- Add the column ProductName to the OrderDetails table and populate the ProductName column values

- Rewrite the above query as follows:

Collapse

Select ProductName,OrderQty FROM orderDetails Where SalesOrderID = 47057

Please note that, after applying de-normalization in the OrderDetails table, you no longer need to join the OrderDetails table with the Products table to retrieve Product names and their ordered quantity. So, while executing the SQL, the execution engine does not have to process any joining between the two tables. So, the query performs relatively faster.

Please note that, in order to improve the select operation’s performance, we had to do a sacrifice. The sacrifice was, we had to store the same data (ProductName) in two places (In OrderDetails and Products Table). So, while we insert/update the ProductName field in Products table, we also have to do the same in the OrderDetails Table. Additionally, doing this de-normalization will increase the overall data storage.

So, while de-normalizing, we have to do some trade-offs between the data redundancy and select operation’s performance. Also, we have to re-factor some of our data insert/update operations after applying the de-normalization. Please be sure to apply de-normalization only if you have applied all other optimization steps and yet to boost up the data access performance. Also, make sure that you don’t apply heavy de-normalizations so that, your basic data design does not get destroyed. Apply de-normalization (When required) only on the key tables that are involved in the expensive data access routines.

History tables

In your application if you have some data retrieval operations (Say, reporting) that periodically runs on a time period, and, if the process involves tables that are large in size having normalized structure, you can consider moving data periodically from your transactional normalized tables into a de-normalized heavily indexed single history table. You also can create a scheduled operation in your database server that would populate this history table on a specified time each day. If you do this, the periodic data retrieval operation than has to read data only from a single table that is heavily indexed, and, the operation would perform a lot faster.

For example, let’s say a chain store has a monthly sales reporting process that takes 3 hours to complete. You are assigned to minimize the time it takes, and to do this you can follow these steps (Along with performing other optimization steps):

- Create a history table with de-normalized structure and heavy indexing to store sales data.

- Create a scheduled operation in SQL server that runs each 24 hours interval (Midnight) and specify an SQL for the scheduled operation to populate the history table from the transactional tables.

- Modify your reporting codes so that if reads data from the history table now.

Creating the scheduled operation

Follow these simple steps to create a scheduled operation in SQL Server that periodically populates a history table on a specified schedule.

- Make sure that, SQL Server Agent is running. To do this, launch the SQL Server Configuration Manager, click on the SQL Server 2005 Services and start the SQL Server Agent by right clicking on it.

Figure : Starting SQL Server Agent Service

- Expand SQL Server Agent node in the object explorer and click on the “Job” node to create a new job. In the General tab, provide job name and descriptions.

Figure : Creating a new Job

- On the “Steps” tab, click on the “New” button to create a new job step. Provide a name for the step and also provide TSQL (That would load the history table with the daily sales data) along with providing Type as “Transact-SQL script(T-SQL)”. Press “OK” to save the Step.

Figure : Job step to load daily sales data on history table

- Go to the “Schedule” tab and click on “New” button to specify a job schedule.

Figure : Specifying job schedule.

- Click the “OK” button to save the schedule and also to apply the schedule on the specified job.

Perform expensive calculations in advance in data Insert/Update, simplify Select query

Naturally, most of the cases in your application you will see that data insert/update operations occur one by one, for each record. But, data retrieval/read operations involve multiple records at a time.

So, if you have a slowly running read operation (Select query) that has to do complex calculations to determine a resultant value for each row in the big result set, you can consider doing the following:

- Create an additional column in a table that will contain the calculated value

- Create a trigger for Insert/Update events on this table and calculate the value there using the same calculation logic that was in the select query earlier. After calculation, update the newly added column value with the calculated value.

- Replace the existing calculation logic from your select query with the newly created field

After implementing the above steps, the insert/update operation for each record in the table will be a bit slower (Because, the trigger will now be executed to calculate a resultant value), but, the data retrieval operation should run faster than previous. The reason is obvious, while the Select query executes, the database engine does not have to process the expensive calculation logic any more for each row.

What’s next?

I wish you have enjoyed all the optimization steps done so far. We have gone through indexing, refactoring the TSQLs, applying some advanced indexing techniques, de-normalizing portion of the database and using History tables to speed up our data access routines. Having done all of the above steps should bring your data access operations to a satisfactory level, but, we are not satisfied yet (Are we?).

So, we are going to do many more things to do further optimizations in our data access operations. Let's go through the next article in this series:

History

First version:1st May

License

This article, along with any associated source code and files, is licensed under The Code Project Open License (CPOL)

About the Author

| M.M.Al-Farooque Shubho Member |

A passionate software developer who loves to think, learn and observe the world around. Working in the .NET based software application development for quite a few years. My LinkedIn profile will tell you more about me. Have a look at My other CodeProject articles. Learn about my visions, thoughts and findings at My Blog. Awards: Prize winner in Competition "Best Asp.net article of May 2009" Prize winner in Competition "Best overall article of May 2009" Prize winner in Competition "Best overall article of April 2009"

|

[SQL]优化SQL Server数据访问10个步骤一二

Introduction

“It’s been months since you and your team have developed and deployed a site successfully in the internet. You have a pretty satisfied client so far as the site was able to attract thousands of users to register and use the site within a small amount of time. Your client, management, team and you – everybody is happy.

Life is not a bed of roses. As the number of users in the site was started growing at a rapid rate day by day, problem started occurring. E-mails started to arrive from the client complaining that the site is performing too slowly (Some of them ware angry mails). The client claimed that, they started losing users.

You started investigating the application. Soon you discovered that, the production database was performing extremely slowly when application was trying to access/update data. Looking into the database you found that the database tables have grown large in size and some of them were containing hundreds of thousands of rows. The testing team performed a test on the production site and they found that the order submission process was taking 5 long minutes to complete whereas it used to take only 2/3 seconds to complete in the test site before production launch”.

This is the same old story for thousands of application projects developed worldwide. Almost every developer including me has taken part in the story sometime in his/her development life. So, I know why such situation took place, and, I can tell you what to do to overcome this.

Let’s face it. If you are part of this story, you must have not written the data access routines in your application in the best possible way and it’s time to optimize those now. I want to help you doing this by sharing my data access optimization experiences and findings with you in this series of articles. I just hope, this might enable you to optimize your data access routines in the existing systems, or, to develop the data access routines in the optimized way in your future projects.

Scope

Please note that, the primary focus of these series of articles is “Data access performance optimization in transactional (OLTP) SQL Server databases”. But, most of the optimization techniques are roughly the same for other database platforms.

Also, the optimization techniques I am going to discuss are applicable for the software application developers only. That is, as a developer, I’ll focus on the issues that you need to follow to make sure that you have done everything that you could do to optimize the data access codes you have written or you are going to write in future. The Database Administrators (DBA) also has great roles to play in optimizing and tuning the database performance. But, optimization scopes that fall into a DBA’s area are out of scope for these articles.

We have a database to optimize, let’s start it!

When a database based application performs slowly, there is a 90% probability that, the data access routines of that application are not optimized, or, not written in the best possible way. So, you need to review and optimize your data access/manipulation routines for improving the overall application’s performance.

So, let us start our optimization mission in a step-by-step process:

Step1: Apply proper indexing in the table columns in the database

Well, some could argue whether implementing proper indexing should be the first step in performance optimization process in the database. But, I would prefer applying indexing properly in the database in the first place, because of following two reasons:

- This will allow you to improve the best possible performance in the quickest amount of time in a production system.

- Applying/creating indexes in the database will not require you to do any application modification and thus will not require any build and deployment.

Of course, this quick performance improvement can be achieved if you find that, indexing is not properly done in the current database. However, if indexing is already done, I would still recommend you to go through this step.

What is indexing?

I believe, you know what indexing is. But, I’ve seen many people being unclear on this. So, let us try to understand indexing once again. Lets us read a small story.

Long ago there was a big library in an ancient city. It had thousands of books, but, the books ware not arranged in any order in the book shelves. So, each time a person asked for a book to the librarian, the librarian had no way but to check every book to find the required book that the person wants. Finding the desired book used to take hours for the librarian, and, most of the times the persons who ask for books had to wait for a long time.

[Hm..seems like a table that has no primary key. So, when any data is searched in the table, the database engine has to scan through the entire table to find the corresponding row, which performs very slow.]

Life was getting miserable for that librarian as the number of books and persons asking for books were increasing day by day. Then one day, a wise guy came to the library, and, seeing the librarian’s measurable life, he advised him to number each book and arrange these in the book shelves according to their numbers. “What benefit would I get?” Asked the librarian. The wise guy answered, “well, now if somebody gives you a book number and ask for that book, you will be able to find the shelves quickly that contains the book’s number, and, within that shelve, you can find that book very quickly as these are arranged according to their number”.

[Numbering the books sounds like creating primary key in a database table. When you create a primary key in a table, a clustered index tree is created and all data pages containing the table rows are physically sorted in the file system according to their primary key values. Each data page contains rows which are also sorted within the data page according to their primary key values. So, each time you ask any row from the table, the database server finds the corresponding data page first using the clustered index tree (Like, finding the book shelve first) and then finds the desired row within the data page that contains the primary key value (Like, finding the book within the shelve)]

“This is what I exactly need!” The excited librarian instantly starts numbering the books and arranging these across different book shelves. He spent a whole day to do this arrangement, but, at the end of the day, he tested and found that that a book now could be found using the number within no time at all! The librarian was extremely happy.

[That’s exactly what happens when you create a primary key in a table. Internally, a clustered index tree is created, and, the data pages are physically sorted within the data file according to the primary key values. As you can easily understand, only one clustered index can be created for a table as the data can be physically arranged only using one column value as the criteria (Primary key). It’s like the books can only be arranged using one criterion (Book number here)]

Wait! The problem was not completely solved yet. In the very next day, a person asked a book by the book’s name (He didn’t have the book’s number, so, all he had the book’s name). The poor librarian had no way but to scan all numbered book from 1 to N to find the one the person asked for. He found the book in the 67th shelves. It took 20 minutes for the librarian to find the book. Earlier, he used to take 2-3 hours to find a book when these were not arranged in the shelves, so, that’s an improvement still. But, comparing to the time to search a book using it’s number (30 seconds), this 20 minute seemed to be a very high amount of time to the librarian. So, he asked the wise man how to improve on this.

[This happens when you have a Product table where you have a primary key ProductID, but, you have no other index in the table. So, when a product is to be searched using the Product Name, the database engine has no way but to scan all physically sorted data pages in the file to find the desired named book]

The wise man told the librarian “Well, as you already have arranged your books using their serial numbers, you cannot re-arrange these. So, better create a catalog or index where you will have all the book’s names and their corresponding serial numbers. But, in this catalog, arrange the book names in their alphabetic number and group the book names using each alphabet so that, if any one wants to find a book named “Database Management System”, you just follow these steps to find the book

- Jump into the section “D” of your “Book name “catalog” and find the book name there

- Read the corresponding serial number of the book and find the book using the serial number (You already know how to do this)

“You are a genius! Exclaimed the librarian. Spending some hours he immediately created the “Book name” catalog and on a quick test he found that he only required 1 minute (30 seconds to find the book’s serial number in the “Book name” catalog and another 30 seconds to find the book using the serial number) to find a book using the book name.

The librarian thought that, people might ask for books using several other criteria like book name and/or author’s name etc. so, he created another similar catalog for author names and after creating these catalogs the librarian could find any book using the some common book finding criteria (Serial number, Book name, Author’s name) within a maximum 1 minute of time. The miseries of the librarian ended soon and lots of persons started gathering at the library for books as they could get the book really fast and the library became very popular.

The librarian started passing his life happily ever after. The story ends.

By this time, I am sure you have understood what indexes really are, why they are important and what their inner workings are. For example if we have a “Products” table, along with creating a clustered index (That is automatically created when creating the primary key in the table), we should create a non-clustered index on the ProductName column. If we do this, the database engine creates an index tree for the non-clustered index (Like, the “book name” catalog in the story) where the product names will be sorted within the index pages. Each index page will contain some range of product names along with their corresponding primary key values. So, when a Product is searched using the product name in the search criteria, the database engine will first seeks the non-clustered index tree for Product name to find the primary key value of the book. Once found, the database engine then searches the clustered index tree with the primary key to find the row for the actual book that is being searched.

Following is how an index tree looks like:

Figure : Index tree structure

This is called a B+ Tree (Balanced tree). The intermediate nodes contain range of values and direct the SQL engine where to go while searching for a specific index value in the tree starting from the root node. The leaf nodes are the nodes which contain the actual index values. If this is a clustered index tree, the leaf nodes are the physical data pages. If this is a non-clustered index tree, the leaf nodes contain index values along with clustered index keys (Which the database engine uses to find the corresponding row in the clustered index tree.

Usually, finding a desired value in the index tree and jumping to the actual row from there takes an extremely small amount of time for the database engine. So, indexing generally improves the data retrieval operations.

So, time to apply indexing in your database to retrieve results fast!

Follow these steps to ensure proper indexing in your database

Make sure that every table in your database has a primary key.

This will ensure that every table has a Clustered index created (And hence, the corresponding pages of the table are physically sorted in the disk according to the primary key field). So, any data retrieval operation from the table using primary key, or, any sorting operation on the primary key field or any range of primary key value specified in the where clause will retrieve data from the table very fast.

Create non-clustered indexes on columns which are:

- Frequently used in the search criteria

- Used to join other tables

- Used as foreign key fields

- Of having high selectivity (Column which returns a low percentage (0-5%) of rows from a total number of rows on a particular value)

- Used in the orDER BY clause

- Of type XML (Primary and secondary indexes need to be created. More on this in the coming articles)

Following is an example of an index creation command on a table:

Create INDEX

NCLIX_OrderDetails_ProductID ON

dbo.OrderDetails(ProductID)

Alternatively, you can use the SQL Server Management Studio to create index on the desired table

Figure : Creating index using SQL Server Management Studio

Step2 : Create appropriate covering indexes

So, you have created all appropriate indexes in your database, right? Suppose, in this process you have created an index on a foreign key column (ProductID) in the Sales(SelesID,SalesDate,SalesPersonID,ProductID,Qty) table. Now, assuming that, the ProductID column is a “Highly selective” column (Selects less than 5% of the total number of rows rows using any ProductID value in the search criteria) , any Select query that reads data from this table using the indexed column (ProductID) in the where clause should run fast, right?

Yes, it does, comparing to the situation where no index created on the foreign key column (ProductID) in which case, a full table scan (scanning all related pages in the table to retrieve desired data). But, still, there is further scope to improve this query.

Let’s assume that, the Sales table contains 10,000 rows, and, the following SQL selects 400 rows (4% of the total rows)

Select SalesDate, SalesPersonID FROM Sales Where ProductID = 112

Let’s try to understand how this SQL gets executed in the SQL execution engine

- The Sales table has a non-clustered index on ProductID column. So, it “seeks” the non-clustered index tree for finding the entry that contains ProductID=112

- The index page that contains the entry ProductID = 112 also contains the all Clustered index keys (All Primary key values, that is SalesIDs, that have ProductID = 112 assuming that primary key is already created in the Sales table)

- For each primary key (400 here), the SQL server engine “seeks” into the clustered index tree to find the actual row locations in the corresponding page.

- For each primary key, when found, the SQL server engine selects the SalesDate and SalesPersonID column values from the corresponding rows.

Please note that, in the above steps, for each of the primary key entries (400 here) for ProductID = 112, the SQL server engine has to search the clustered index tree (400 times here) to retrieve the additional columns (SalesDate, SalesPersonID) in the query.

It seems that, along with containing clustered index keys (Primary key values) if the non-clustered index page could also contain two other column values specified in the query (SalesDate, SalesPersonID), the SQL server engine would not have to perform the step 3 and step 4 in the above steps, and, thus, would be able to select the desired results even faster just by “seeking” into the non clustered index tree for ProductID column, and, reading all three mentioned column values directly from that index page.

Fortunately, there is a way to implement this feature. This is what is called “Covered index”. You create “Covered indexes” in table columns to specify what are the additional column values the index page should store along with the clustered index key values (primary keys). Following is the example of creating a covered index on the ProductID column in Sales table:

Create INDEX NCLIX_Sales_ProductID--Index name

ON dbo.Sales(ProductID)--Column on which index is to be created

INCLUDE(SalesDate, SalesPersonID)--Additional column values to include

Please note that, Covered index should be created including a few columns that are frequently used in the select queries. Including too many columns in the covered indexes would not give you too much benefit. Rather, doing this would require too much memory to store all the covered index column values resulting in over consumption of memory and slow performance.

Use Database Tuning Advisor’s help while creating covered index

We all know, when an SQL is issued, the optimizer in the SQL server engine dynamically generates different query plans based on:

- Volume of Data

- Statistics

- Index variation

- Parameter value in TSQL

- Load on server

That means, for a particular SQL, the execution plan generated in the production server may not be the same execution plan that is generated in the test server, even though, the table and index structure is the same. This also indicates that, an index created in the test server might boost some of your TSQL performance in the test application, but, creating the same index in the production database might not give you any performance benefit in the production application! Why? Well, because, the SQL execution plans in the test environment utilizes the newly created indexes and thus gives you better performance. But, the execution plans that are being generated in the production server might not use the newly created index at all for some reasons (For example, a non-clustered index column is not “highly” selective in the production server database, which is not the case in the test server database).

So, while creating indexes, we need to make sure that, the index would be utilized by the execution engine to produce faster result. But, how can we do this?

The answer is, we have to simulate the production server’s load in the test server, and then need to create appropriate indexes and test those. Only then, if the newly created indexes improves performance in the test environment, these will most likely to improve performance in the production environment.

Doing this should be hard, but, fortunately, we have some friendly tools to do this. Follow these instructions:

- Use SQL profiler to capture traces in the production server. Use the Tuning template (I know, it is advised not to use SQL profiler in the production database, but, sometimes you have to use it while diagnosing performance problem in the production). If you are not familiar with this tool, or, if you need to learn more about profiling and tracing using SQL profiler, read http://msdn.microsoft.com/en-us/library/ms181091.aspx.